Research-driven builders and investors in the cybernetic economy

We contributed to Lido DAO, P2P.org, =nil; Foundation, DRPC, Neutron and invested into 150+ projects

Towards the Cybereconomy

This article envisions a cybereconomy driven by peer-to-peer networks, programmable markets, and AI, aimed at benefiting humanity.

First part: path to decentralized AGI. Second part: social, economic, and technical problems with AI. Third part: building blocks of decentralized AI.

Watch a brief video presentation of this article (7min)

TL;DR

What?

AI is advancing rapidly. AGI is coming. This is the largest technological wave impacting society.

So what?

It leads to risks such as the centralization of power and ownership by a limited number of entities, censorship, manipulation, regulatory control, unequal distribution of technology, and politically biased AI.

Now what?

Cryptography, distributed systems, machine learning, and cryptoeconomic networks can enhance AI systems. This increases competition, accelerates innovation, and makes AI more fair, accessible, explainable, and useful. Let’s explore how exactly 👇

Introduction

AI is booming. Internet-scale data, highly parallelizable deep neural network architectures and compute scaling laws have led to incredible progress in the field of AI.

AI and autonomous agents are augmenting humans and our existing economy. They extend our capabilities and mind's reach, facilitate coordination, and enhance productivity.

However, now is precisely the time to ask some fundamental questions about what the post-AGI world will look like. How can we ensure that it remains unbiased, uncensored, and not controlled by a single entity or a very few? Do we want AGI to be a single omnipotent black box running in an OpenAI bunker data center? Or will it be trillions of interconnected models and agents owned, controlled and developed by thousands of contributors?

Is AGI a single gigantic model or a network of networks of billions of intelligent agents, pre-trained models, fine-tunes, datasets and tools built collectively by an open community of developers?

How can we ensure healthy competition, innovation and safety in AI that will lead to prosperity and abundance for all?

web3 + Software 3.0

Chris Dixon famously defined web3 as a new iteration of the internet in which users can read, write and own digital content and assets. The internet has evolved through several phases, each characterized by distinct technologies, user experiences, and paradigms for interaction and data management.

Web 1.0 consisted mainly of static webpages where users were primarily content consumers.

Web 2.0 introduced an era of interactivity, social networking, and user-generated content.

web3 represents the next phase of the internet, focusing on decentralization, privacy and user sovereignty. It aims to give users control over their own data, identity, and digital assets.

A similar evolution is happening in software programming paradigms. In traditional software development (Software 1.0), programmers write code that explicitly governs the behavior of the software. Software 2.0, a term coined by Andrej Karpathy of OpenAI and Tesla, is an approach made possible by advances in machine learning, and deep neural networks in particular.

And now we’re entering the age of Software 3.0, where systems of autonomous agents powered by AI will be able to write code, interact with external tools, improve themselves and participate in the peer-to-peer digital economy, interacting with human users and other autonomous agents. Here’s what this evolution looks like:

Software 1.0 produces a deterministic algorithm that can be compiled and run on any computer. Users then run the program to receive a predictable result.

Software 2.0 takes in training data and produces a pre-trained model in the form of the weights of a neural network. Users can then input their data, such as text or images, into the neural network and receive an output, typically in the form of a probability distribution.

Software 3.0 is represented by an autonomous AI agent that takes in instructions from the user in any form (e.g. natural language, voice, video), and performs a set of actions and decisions to achieve the desired result. Such an agent can call external APIs, write and execute code, interact with other agents and even build new neural networks.
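
To make the first two paradigms concrete, here is a minimal sketch of the same task, spam detection, written both ways. The task, the function names and the weights are all made up for illustration; the weights stand in for what a real training run would produce.

```python
import math

# Software 1.0: a programmer writes the rule by hand.
# The same input always yields the same, predictable answer.
def is_spam_v1(text: str) -> bool:
    return "free money" in text.lower()

# Software 2.0: the behavior lives in learned weights.
# These numbers are placeholders, not the result of real training.
WEIGHTS = {"free": 1.5, "money": 1.2, "meeting": -2.0}
BIAS = -1.0

def spam_probabilities_v2(text: str) -> dict[str, float]:
    """Return a probability distribution over the labels 'spam' and 'ham'."""
    score = BIAS + sum(WEIGHTS.get(word, 0.0) for word in text.lower().split())
    p_spam = 1.0 / (1.0 + math.exp(-score))  # logistic function
    return {"spam": p_spam, "ham": 1.0 - p_spam}
```

The first function returns a hard yes or no; the second returns probabilities that the caller has to interpret. A Software 3.0 agent would wrap models like the second one in a loop that decides which actions to take.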

AI agents with web3 capabilities to own, transact and act autonomously on behalf of their users are the next iteration in networking and autonomous systems technology, which we call a cybernetic economy. We believe that in the future, such networks of autonomous agents with economic capabilities (and not just a single model) will form AGI, an AI capable of completing tasks at least as well as humans.

Evolution of Autonomous agents

One of the most exciting use cases of AI is autonomous agents. Think of them as a multitude of personal assistants that are knowledgeable, tireless and always available to help you out. They can help you plan your trip, learn new skills, get medical consultations, write software, optimize taxes, or simply have some fun with NPCs in the virtual world.

An autonomous agent is an intelligent system capable of achieving goals and making decisions without explicit instructions. It can also improve itself along the way. It could be a simple chatbot pretending to be your favorite movie character, or a complex system with dynamic model routing, fine-tuned models, long-term memory and the ability to use external applications or even perform physical actions.

Most agents today are simple scripts that use chains of LLMs (with the help of libraries like LangChain, TaskWeaver, and LlamaIndex) and external tools via function calling. Production-grade agents are mostly the same concept, but heavily optimized with heuristics, prompting and retrieval-augmented generation (RAG) data for increased reliability.
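
The function-calling pattern described above can be sketched in a few lines. This is a toy illustration, not the API of LangChain or any other library: `fake_llm` is a hypothetical stand-in for a real model, and the two tools are trivial placeholders.

```python
import json
from typing import Callable

# Tools the agent is allowed to call. Both are toy stand-ins.
def add(a: float, b: float) -> float:
    return a + b

def word_count(text: str) -> int:
    return len(text.split())

TOOLS: dict[str, Callable] = {"add": add, "word_count": word_count}

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM that answers with a JSON tool call."""
    if "sum" in prompt:
        return json.dumps({"tool": "add", "args": {"a": 2, "b": 3}})
    return json.dumps({"tool": "word_count", "args": {"text": prompt}})

def run_agent(task: str, llm: Callable[[str], str] = fake_llm):
    call = json.loads(llm(task))   # 1. the model proposes a tool call
    tool = TOOLS[call["tool"]]     # 2. the script looks the tool up by name
    return tool(**call["args"])    # 3. the script executes it and returns the result
```

A production agent would loop over these three steps, feed tool results back into the model, and validate the model's output before executing anything.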

Agents in the future will get more autonomy and reliability. Partially this will happen with improvements in existing ML models, but a lot of value will also come from building digitally-native economies for autonomous agents.

Cybernetic economics

As Ralph Merkle famously put it, Bitcoin is the first example of a new form of life. Since his paper was first published in early 2016, we have seen many more new forms of life emerge: just like Bitcoin, but more specialized and solving a larger array of problems.

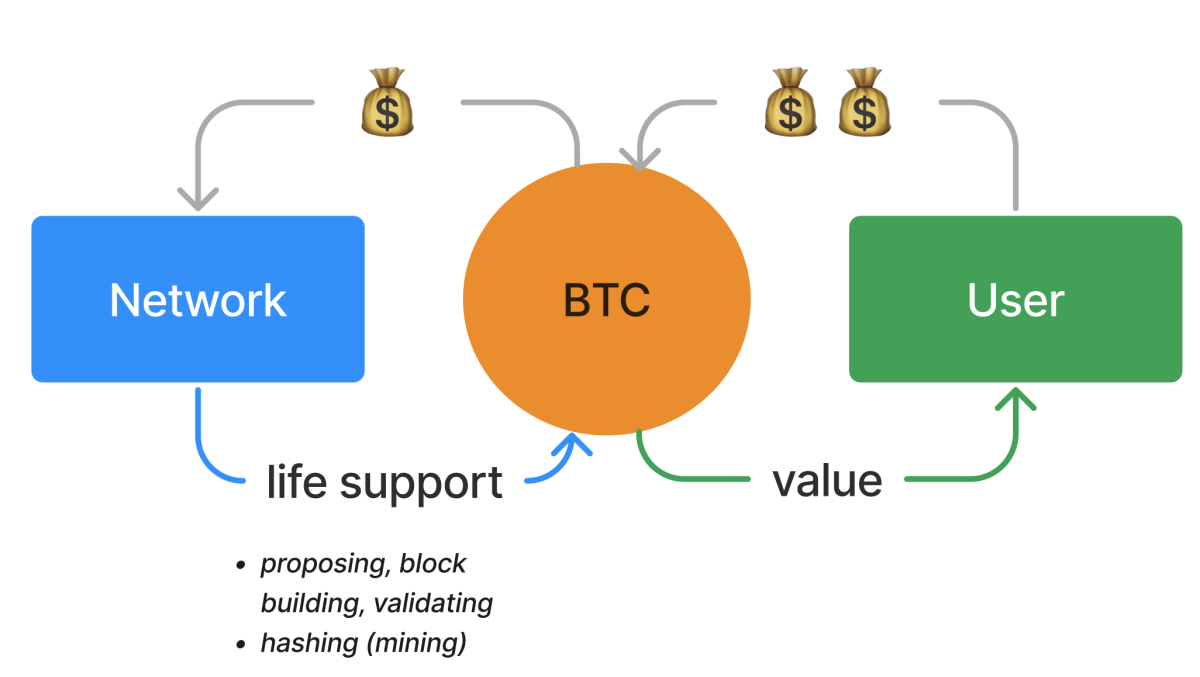

A blockchain is software that produces valuable work for humanity and pays the people who maintain it. It provides a way for individuals to contribute to the network and be rewarded for their efforts. By incentivizing participation, a blockchain enables the creation of a decentralized ecosystem where responsibilities are distributed among a network of participants. This distributed approach ensures that the system continues to function even if individual nodes or participants go offline.

Anyone who wants to create their own new digital life form can do so. Like Bitcoin, it will live on the internet. Like Bitcoin, it will survive as long as it does something that people will pay for.

This quote from Merkle’s paper applies not only to blockchains with primarily financial use cases but also to autonomous agents.

Similarly, an autonomous agent completes valuable work for people, receives compensation for its services, and uses the funds to acquire resources and/or enhance its capabilities. Such an agent assists people and continuously improves its performance by reinvesting what it earns.

In the case of a blockchain, the “life support” of the network primarily consists of block mining/validation and the creation of open source software. For AI agents, there’s a broader list of work that the network can do to improve an agent’s performance.

Composability

Open source leads to faster innovation because collaboration is not limited by organizational boundaries, allowing for a diverse range of perspectives and expertise to be integrated into the development process.

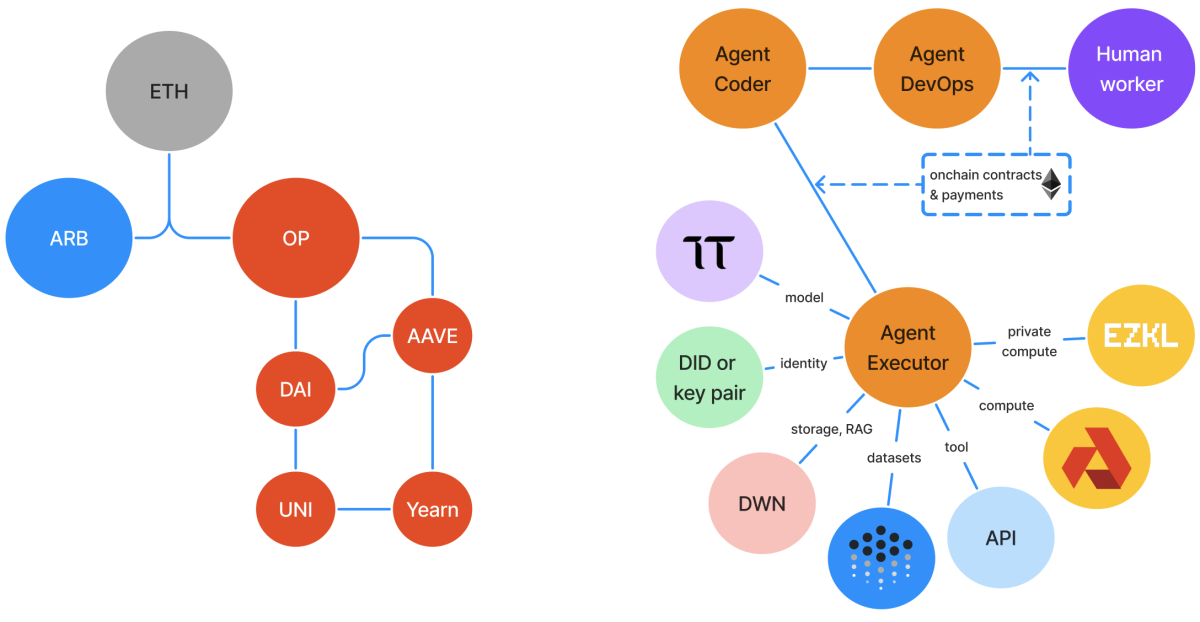

For example, Ethereum emerged as the most composable blockchain of all: Optimism is a layer 2 rollup solution built on top of the Ethereum layer 1 network. On top of Optimism, there is a MakerDAO contract that implements the DAI stablecoin. Aave uses DAI to build its money market. Yearn Finance provides self-custodial money management strategies that leverage the Aave platform, which relies on DAI, which in turn relies on Optimism, which ultimately relies on ETH. All of this happens in a permissionless manner: unlike in traditional financial markets, protocols don’t need a formal contract to leverage each other’s tech.

Decentralized agents are expected to outperform centralized ones largely for a similar reason: access to a wide range of constantly improving models, tools, and skills. They have an identity and a wallet that allow them to enter into contractual relationships, such as purchasing data access, agreeing to pay royalties in the future, or sharing revenue with code contributors. They can pay for compute resources on an open market, similar to the concept of gas in blockchain networks.

To get an idea of what the cybereconomy can look like, imagine a landing page builder agent:

The agent is paid in cryptocurrency by a company that needs a new website.

The agent shares a portion of its revenue with other protocols that power it.

It uses a pre-trained model that is decentralized and accessible through Bittensor Subnet 6. This model was trained using a dataset purchased from a permissionless marketplace called Ocean Protocol.

The model runs on a decentralized compute network powered by Akash.

The agent has an on-chain identity, wallet, and reputation score. Thus it can be discovered through the marketplace with a trust score associated with it.

The agent is capable of calling third-party APIs to test, run, and deploy code.

It can even pay humans to perform tasks that require human intelligence, such as solving a CAPTCHA.
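
The revenue sharing in this example can be sketched as a simple split in basis points. The provider names and percentages below are hypothetical, and a real agent would do this in a smart contract rather than in Python; the sketch only shows the arithmetic.

```python
def split_revenue(payment: int, shares_bps: dict[str, int]) -> dict[str, int]:
    """Split an integer payment (in the smallest token unit) by basis-point shares.

    The agent keeps whatever is not assigned to an upstream provider,
    including any rounding remainder.
    """
    if sum(shares_bps.values()) > 10_000:
        raise ValueError("shares exceed 100%")
    payouts = {name: payment * bps // 10_000 for name, bps in shares_bps.items()}
    payouts["agent"] = payment - sum(payouts.values())
    return payouts

# Hypothetical split for the landing page builder: 20% to the model,
# 5% to the dataset owner, 15% to the compute network.
shares = {"model_provider": 2_000, "dataset_owner": 500, "compute_network": 1_500}
payouts = split_revenue(1_000_000, shares)
```

Integer arithmetic is used on purpose: token amounts are usually integers, and giving the remainder to one party guarantees that the payouts always add up to the payment.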

Fast forward a few years and we can expect a network that consists of thousands or maybe billions of AI agents that can create long chains of relationships between agents and people to accomplish non-trivial tasks. A long chain of highly specialized agents is more likely to complete a task than a single large foundation model.

Thanks to the inherent liquidity and composability of web3, autonomous agents can not only function as standalone products but also participate in contractual relationships with other agents. One can argue that autonomous agents can only thrive in permissionless and open environments; otherwise they are hindered by bureaucratic workflows. Rights to agents’ revenue (equity) can be tokenized and sold onchain. Agents also have the flexibility to switch some of the underlying infrastructure, such as tools or models (unlike GPTs). For instance, they can replace a foundation model for code generation with a better one on the fly, using the results of decentralized evaluation or benchmarking.

Contrast this with the Web 2.0 world, where autonomous agents:

have to be created by companies, which takes time and has significant bureaucratic overhead

have to rely on very few centralized model providers like Google or OpenAI, risking market cannibalization or censorship

offer no data privacy guarantees to their customers

lock customer data within the provider's system

require customers to sign up for annoying recurring payment plans.

Importance of decentralized AGI

AI is already producing trillions of dollars of value today by augmenting personal lives and business processes. It is already increasing the rate of technological progress and will continue to do so on a larger scale.

Today, most of the attention and capital is going towards very few companies, such as OpenAI, Anthropic, Meta and Google. It would be unfair to say that they are not producing amazing research and building great AI products.

However, we believe that AGI will come not from a single huge black-box model but rather from the experience of billions of AI agents interacting with humans and each other, and accumulating “tacit knowledge” (as Tyler Cowen put it), skills and real-world capabilities.

In this part I review the risks and potential problems caused by the centralized nature of AI development.

Centralization of power

Centralization of power leads to monopolies, stagnation and censorship.

We do not want to live in a world where, within a few years, there are only three AI models controlled by three corporations or governments. We do not want decisions regarding what these models can or cannot say to be made behind closed doors.

We do not want to be limited to AI assistants that are strongly biased towards a specific political standpoint. An LLM reflects the political views of its creators. In today's world, it seems like we have to choose between big tech and authoritarian governments. Even with open-weights models, we don't know how they were trained or what biases they may have.

Furthermore, centralized API access carries the risk of downtime or of being banned by the API provider. This can result in your business, or a service you depend on, becoming unavailable at the most inconvenient time.

Centralization of infrastructure

Today, the infrastructure required to build AI models and applications is in the hands of very few gatekeepers. The supply chain for AI chips is controlled by a few companies. Training data is owned by a handful of IT giants.

Data lock-in

Popular centralized platforms with user-generated content, such as Reddit and Twitter, have started blocking API access to user data. Even though that content was created by the users and belongs to them, the platforms decide who can access it and how.

Manipulation, deceiving users and subtle control over what you think, buy or say is a much more acute and real problem in AI than robots ending the world. Chatbots will know you very well and will have enough intelligence to seed a thought in your head without you even noticing. Do we want black-box LLMs that are being secretly tuned or user-owned AI?

Regulation

“Regulation is a friend of the incumbent” as Bill Gurley put it in his famous talk. Regulatory capture in AI creates a risk of actually reducing competition and slowing down innovation. CEOs of large AI companies rush to meet with government officials of many countries, primarily because regulation becomes their competitive moat, not because open source AI is in any way worse than centralized AI.

Corporations are driven by the need to maximize shareholder returns. This means that regulatory capture of AI, not user self-sovereignty, is the underlying incentive for them. Closed-source development leads to control by a small group of people who decide what's good for others. At least Stable Diffusion is not generating factually incorrect images for the sake of meeting “diversity KPIs”, like Gemini does.

Regulators are also too slow to react and often lag behind the actual technology development by many years. In the ever-accelerating speed of technological progress, traditional approaches to regulation simply fail to keep up. A single large actor, such as the government, is unable to adequately create conditions for progress and market self-organization.

Governance by a few

In most models today (even open-weights ones), very few people decide what goes into the training set (and therefore what the model actually learns). The same people decide what the model is or is not allowed to say. In the near future (within months), this could lead to ideological manipulation or even outright propaganda. It is unrealistic to expect that highly censored, red-teamed and/or biased models will not exist. But do they have to be the only option available to humanity?

Privacy and control

As we entrust more sensitive data about ourselves and our businesses to AI, we don’t want all that data to end up in a single data center controlled by Google or Meta. Preserving data sovereignty and privacy becomes essential when we trust AI agents with our most intimate information. An AI friend, coach, doctor and accountant will collectively know you better than anyone else. Therefore, the value of securing this data will only grow.

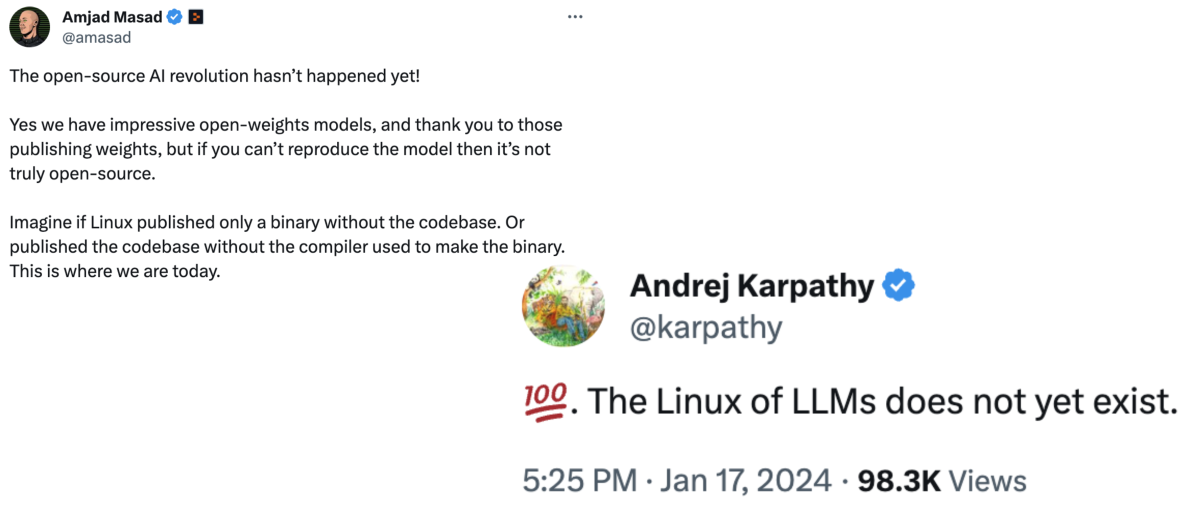

Pace of innovation

Open source, free from corporate or government control, has spurred significant technical innovation over the past few decades. However, unlike traditional open source projects, AI development requires significant capital. While you can build Linux in your basement, training models with 200 billion parameters requires thousands of enterprise-grade GPUs. This is where programmable incentives, also known as blockchains, play a crucial role. More details on this will be covered in the next section.

The peer-to-peer (P2P) economy offers a solution by accelerating competition and idea validation. In an open market and open source format, individuals can formulate and test hypotheses, receive feedback, and access resources for the most promising ideas at a faster pace. In essence, it speeds up the capitalist system and introduces the potential for AI to solve many of humanity's problems.

AI Safety

The conflict between Elon Musk and OpenAI shed light on how the company’s founders think about AI safety and open-source AI.

Google’s unofficial motto for a few decades was “Don’t be evil”. This moral principle expects internal teams to make the right decision, but as startups like Google grew into trillion-dollar public corporations, it got lost in overwhelming bureaucracy and the misaligned incentives of thousands of stakeholders.

In contrast, Bitcoin pioneered the "Can't be evil" approach. It means that anyone is free to make any kind of transaction in the network, but the consensus rules prohibit illegal or malicious actions without any centralized regulatory control or bureaucratic procedures.

The problem of AI safety remains acute and unsolved. We cannot guarantee that powerful centralized players (corporations or nation states) will do everything to ensure the safety of their most powerful ML models. In fact, they have strong incentives to keep those models for themselves to pursue their own political agenda or beat the competition. Nor can we expect that no one in the open source community will try to misuse or abuse powerful AI.

Fundamentally, this is a problem of negative externalities, also known as Moloch. It can partially be solved with strict regulation and control, but at the expense of all the risks described above. However, I believe that a much more robust approach is to build new coordination mechanisms that leverage decentralized reputation, cryptography, mechanism design and the trustless paradigm, along with radical transparency instead of lobbying behind closed doors. I also believe that existing, pre-AGI AI systems will play an important role in building such institutions. More on this in the next part.

An Open Future

In a world where we increasingly communicate with and rely on AI companions, it is important to recognize that uncensorable and not centrally controlled AI should be considered a fundamental human right, similar to free speech.

Why should millions of AI agents and applications rely on just a few models built by big tech firms, without any visibility into how they have been trained or improved over time? Not building decentralized AI means surrendering the way we think, act and live in the future to politicians and the tech behemoths.

It is today, through the way AI systems are architected, that we have the opportunity to determine who will own, control, and have the ability to align the AGI systems of tomorrow.

Building Decentralized AI

As discussed in the previous part, decentralization is an extremely important property in AI. It enables a higher rate of innovation and helps defend against single points of failure, such as political control and censorship, corporate governance and biased black box models, or regulatory obstacles preventing innovation.

Decentralized systems are challenging to build, but they are much more resilient to external events or attacks. Below I provide an overview of the building blocks for decentralized AI.

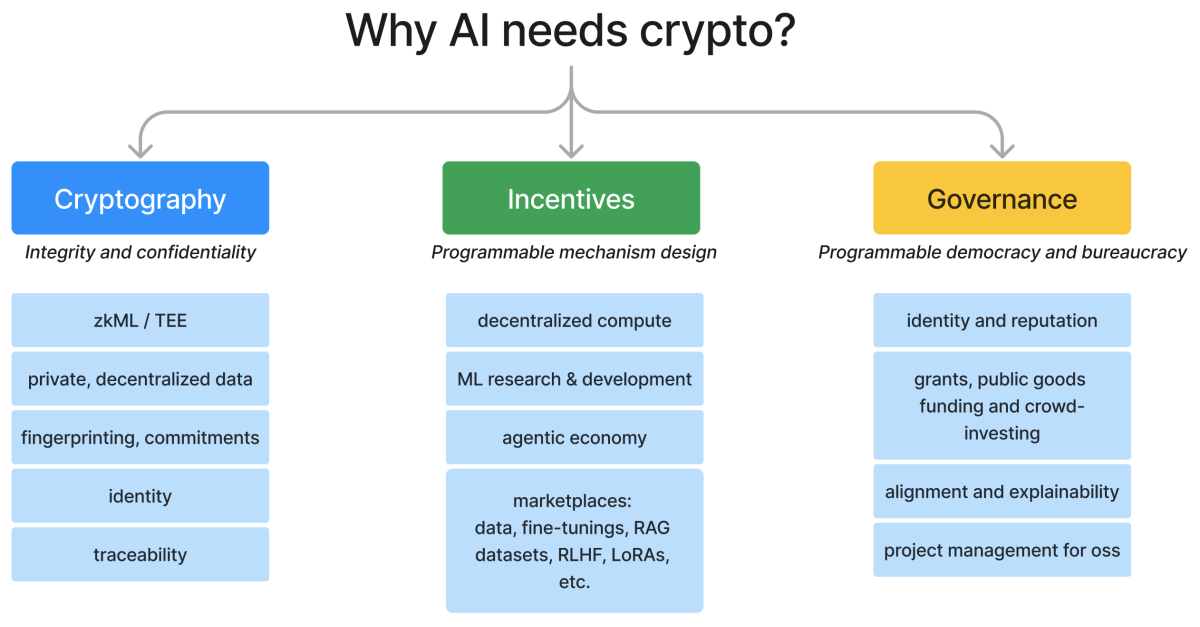

Many existing decentralized systems are referred to as cryptoeconomies because they rely on the following main components:

Cryptography, which ensures the authenticity, confidentiality, and integrity of data, identity, and computation.

Programmable economic mechanisms, which efficiently pool and distribute incentives and coordinate work within a peer-to-peer environment, as opposed to the hierarchical top-down approach of centralized organizations.

Governance, including alignment, grants, and open source project management.

Towards the AGI

As we transition from using ML models directly through small wrappers (such as ChatGPT, Midjourney, Google Bard), to using autonomous agents, and ultimately to networked economies of interoperating agents, the value and cost-effectiveness of these systems will increase. Just as human civilization has grown not solely due to individual intellectual capabilities, but rather as a result of social and economic networks, artificial intelligence will also benefit from specialization and competition.

However, the development of an (ideally decentralized) infrastructure will also be necessary to meet the requirements of these new cybernetic systems.

In this section, I will provide an overview of the core components necessary to build decentralized networks of AI-powered agents. For each component, I will also provide examples of existing companies or products that are currently developing solutions. These examples do not imply endorsement.

Open AGI

We believe that true AGI won’t be a single foundation model. Rather, just like the human brain and human societies, it will consist of many specialized systems, all seamlessly working with each other. To achieve that, we need a protocol. Just like Bitcoin and Ethereum, it has to be trustless and not owned by a single party. Just like in web3, anyone can contribute: pre-train new models, create fine-tunes, contribute RAG datasets, RLHF work, evaluation benchmarks, and tools (think Python functions that an AI agent can call).

The more complex the task is, the more important agent chaining becomes. One prompt won’t cut it if you want AI to help you organize a large conference. AutoGPT is a fun experiment, but it can’t produce more than a believable log of actions. A thousand specialized applications, models, carefully crafted prompts and agent skills (such as writing and executing code) will be able to do that: some of them tailored for planning or marketing activities, others able to pay people to do IRL work and control the execution, while onchain agents can charge customers and pay suppliers… The list goes on to reflect, and eventually automate, all the steps that people take today to achieve the same result.

Privacy and control

As we increasingly rely on AI agents in both our personal and business lives, ensuring the privacy of data and computation becomes of utmost importance. Recent advancements in zero-knowledge, FHE (fully homomorphic encryption), and self-sovereign identity technologies have made it possible to create a fully private, decentralized, and user-controlled ML stack.

Zero-knowledge Machine Learning

Zero-knowledge cryptography, as a technological enabler of provably private computation, can also be applied to machine learning. This has several benefits, such as the ability to perform inference on private data or to make an ML model publicly available without revealing its code and weights. EZKL, Giza Tech and Modulus Labs are developing a zero-knowledge machine learning stack for immediate practical applications in web3. Today, zkML does not scale to large models such as transformers, but even simpler ML algorithms like logistic regression, decision trees and random forests can significantly increase the design space for trustless applications.

Trusted execution environments and confidential computing

Another approach, which achieves a very similar level of privacy guarantees but with slightly stronger trust assumptions (namely, trusting the hardware manufacturer), is to run ML tasks in trusted execution environments, also known as enclaves. NVIDIA rolled out support for confidential computing in all its H100 products and plans to keep supporting this technology in the future. Super Protocol is building a decentralized network for confidential computing, including ML training and inference, among other use cases.

Personal data storage and Decentralized Web Nodes

AI agents require both private computation and access to a significant amount of personal data. This includes communication history, financial and legal information, and details about our personal lives. This data can be utilized to improve the overall accuracy of models, or it can serve as a long-term memory in the form of RAG. To ensure privacy, not only computation but also data must be sufficiently decentralized and controlled by the user. Technologies like Decentralized Web Nodes provide this capability. One example of a user-facing application with fully private yet distributed data storage is Kin.

Incentives Design

Blockchains are systems for programmable incentives. They allow us to build programmable networks of reward distribution and increase the design space of mechanism design. This technology can be used to create decentralized incentives for research and development work in ML.

For example, Bittensor is a peer-to-peer network for collaboration on machine learning models and AI services. Validators are responsible for verifying miners' work and distributing rewards across subnets and individual miners. The network is designed to generate the best possible solution to the machine learning task defined in the subnet code. Both miners and validators receive an equal share of the rewards, which can reach up to $150,000 per day for each top-performing node (at the time of writing).
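
As a rough illustration of how validators' scores can be turned into rewards, here is a simplified sketch. It is not Bittensor's actual consensus mechanism: the function name, the use of a median and the numbers are assumptions made for the example.

```python
from statistics import median

def distribute_rewards(
    scores_by_validator: dict[str, dict[str, float]], emission: float
) -> dict[str, float]:
    """Split `emission` among miners in proportion to their median score.

    `scores_by_validator` maps validator -> {miner: score}. Taking the median
    across validators limits the influence of a single outlier validator.
    """
    miners = {m for scores in scores_by_validator.values() for m in scores}
    consensus = {
        m: median(scores.get(m, 0.0) for scores in scores_by_validator.values())
        for m in miners
    }
    total = sum(consensus.values())
    if total == 0:
        return {m: 0.0 for m in miners}
    return {m: emission * score / total for m, score in consensus.items()}

# Two validators roughly agree; the third scores the miners very differently.
scores = {
    "validator_1": {"miner_a": 0.9, "miner_b": 0.1},
    "validator_2": {"miner_a": 0.8, "miner_b": 0.2},
    "validator_3": {"miner_a": 0.1, "miner_b": 0.9},
}
rewards = distribute_rewards(scores, emission=100.0)
```

In this example the outlier validator barely moves the result: `miner_a` still receives about 80% of the emission.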

Decentralized networks and organizations offer equitable compensation to individuals engaged in machine learning research and engineering, without the need to adhere to strict corporate guidelines or be bound by rigid hierarchies. It turns out that printing your own money (a token) can help bootstrap the supply side of a network. In the long term, however, the network will inevitably have to come up with a token value accrual model.

Marketplaces

Decentralized networks are great for building permissionless marketplaces with efficient economics and simple participation. These range from financial markets on top of Bitcoin and Ethereum to the very specialized marketplaces required to power the agentic AI economy of the future.

Models and fine-tunings

There’s extensive work happening in the field of creating open, incentivized and peer-to-peer marketplaces for neural network models and fine-tunings in academia, decentralized networks, open source community and cloud providers.

Data

Data is essential for AI systems, as it is necessary for training and continuously improving these systems. Decentralizing data collection, licensing, labeling, and sales offers economic efficiency by outsourcing these tasks. Furthermore, it improves the quality of data by allowing experts in specific fields to collect and curate specialized datasets, rather than relying solely on generic AI companies.

Ocean has built a data marketplace where AI developers can buy and sell data assets. Grass is building a data scraping and ETL marketplace with hundreds of thousands of data providers. Sapien is solving the problem of data labelling by crowdsourcing the tasks.

Compute

Just like data, compute is an essential part of any AI system, as it is required for training and inference. In addition to traditional cloud ML providers like Lambda, Runpod, and Replicate, we are witnessing explosive growth in decentralized compute networks. These networks put underutilized hardware, including consumer devices, to work to meet the ever-growing demand for AI compute. For example, Akash is an open network that enables users to buy and sell computing resources. Gensyn is focusing on the more specialized job of training machine learning models with verifiable compute.

I expect that in the near future we will also see decentralized marketplaces for pluggable RAG datasets, agents themselves as well as connectors, tools (ability to call and execute code) and skills (pluggable workflows for agents) designed for specific autonomous agent standards.

Reputation and Mechanism Design

Building marketplaces as open networks is hard. Many blockchain projects and decentralized AI initiatives have failed because they could not find a game-theoretic equilibrium. In a decentralized network, any opportunity to gain a higher payout through dishonest behavior will be immediately exploited by the network participants. It can come in the form of blockchain MEV, Sybil attacks, spam, hacking, social engineering or, for example, running inference on a smaller and computationally cheaper model (e.g. Mistral-7B) while charging for a larger, more performant model (e.g. Llama-2-70B).
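
The model-substitution example can be framed as a back-of-the-envelope incentive check. The sketch below assumes a simple design that the article does not describe: each request is audited with some probability, and a provider caught cheating loses a staked deposit. All names and numbers are illustrative.

```python
def cheating_gain(saving: float, audit_prob: float, slash: float) -> float:
    """Expected extra profit per request from serving a cheaper model.

    saving:     compute cost saved per request by cheating
    audit_prob: probability that a given request is re-checked
    slash:      stake the provider loses when caught
    """
    return saving - audit_prob * slash

def min_audit_prob(saving: float, slash: float) -> float:
    """Smallest audit probability at which cheating stops being profitable."""
    return min(1.0, saving / slash)

# A provider saves 0.01 per request by cheating and has 1.0 at stake.
gain = cheating_gain(saving=0.01, audit_prob=0.05, slash=1.0)
threshold = min_audit_prob(saving=0.01, slash=1.0)
```

With these numbers the expected gain from cheating is negative, so honest behavior is the better strategy. The general point is that the stake at risk times the chance of being caught has to exceed what cheating saves.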

Payments and Communication

For AI to represent the real economy, there needs to be a common protocol for communication between AI agents. Networks like Morpheus and Autonolas provide examples of agent discovery and communication, along with a decentralized runtime for them.

Due to the dynamic nature of AI requests and the variable cost of AI responses, implementing AI payments is non-trivial. Scaling the promise of AI and the associated commerce requires a payments protocol that manages this complexity, analogous to the digital payment solutions that had to emerge to scale commerce on the internet. Nevermined is developing a payments protocol to facilitate the agent-to-agent AI economy.
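
One common way to handle variable per-request costs is usage-based metering against a prepaid deposit. The sketch below is a generic illustration under that assumption and does not describe Nevermined's protocol; the class name and the flat per-token price are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class MeteredChannel:
    balance: int            # prepaid deposit, in the smallest token unit
    price_per_token: int    # hypothetical flat price per generated token
    spent: int = 0

    def charge(self, tokens_used: int) -> int:
        """Charge for one response whose size is only known after the fact."""
        cost = tokens_used * self.price_per_token
        if cost > self.balance - self.spent:
            raise ValueError("insufficient prepaid balance")
        self.spent += cost
        return cost

    def settle(self) -> tuple[int, int]:
        """Return (amount owed to the provider, refund to the payer)."""
        return self.spent, self.balance - self.spent

channel = MeteredChannel(balance=1_000, price_per_token=2)
channel.charge(100)   # a long answer
channel.charge(50)    # a short answer
paid, refund = channel.settle()
```

The payer commits funds once, each response is charged by its actual size, and only the final totals need to be settled.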

Identity and Authenticity

In a world where the majority of content online is AI-generated, authenticity becomes a valuable resource. This includes digitally fingerprinting model output to prove that specific content was produced by a particular type and version of a model. With the rise of deepfakes, zk cryptography can be used to verify genuine content. Prediction markets will be used to determine the truthfulness of content, including content generated by AI.

Identity and authenticity are increasingly crucial for both AI agents and real individuals. Technologies such as decentralized identifiers and zero-knowledge attestations can help address the issue of fraudulent or spam content in a decentralized paradigm, as opposed to a centrally permissioned one.
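
Fingerprinting model output can be illustrated with a keyed hash that binds the content to a model name and version. This is a simplified stand-in: HMAC uses a shared secret, so only key holders can verify it, whereas a real system would more likely use public-key signatures or zero-knowledge attestations. The function names and the record layout are assumptions.

```python
import hashlib
import hmac
import json

def fingerprint(secret_key: bytes, model: str, version: str, content: str) -> str:
    """Return a tag binding `content` to a specific model and version."""
    record = json.dumps(
        {
            "model": model,
            "version": version,
            "content_sha256": hashlib.sha256(content.encode()).hexdigest(),
        },
        sort_keys=True,  # stable serialization, so the tag is reproducible
    )
    return hmac.new(secret_key, record.encode(), hashlib.sha256).hexdigest()

def verify(secret_key: bytes, model: str, version: str, content: str, tag: str) -> bool:
    expected = fingerprint(secret_key, model, version, content)
    return hmac.compare_digest(expected, tag)  # constant-time comparison

key = b"demo-key-not-for-production"
tag = fingerprint(key, "example-model", "1.0", "Hello, world")
```

Changing the content, the model name or the version produces a different tag, so verification fails for anything other than the original output.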

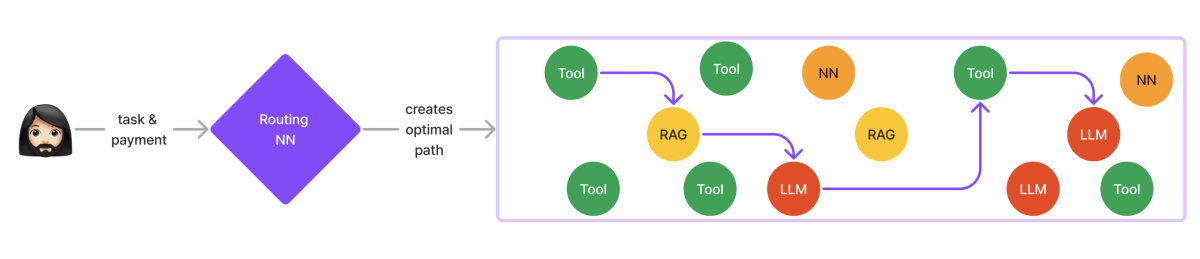

Routing

To complete a truly useful task (e.g. organizing a conference, making a video, teaching a child, filing for residency, or taking legal action), there has to be an efficient routing algorithm that orchestrates the optimal ensemble of agents. This will require at least two decentralized components:

a scoring or evaluation algorithm that constantly updates the predicted loss of a model, agent or tool on a specific task,

and a routing framework that generates a chain or ensemble of agents that will work (sometimes in parallel, sometimes sequentially) to complete the task.
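
The two components can be sketched together in a centralized toy version. It assumes that a task is already broken into typed steps and that some evaluator reports an observed loss after each run; the exponential moving average and all names are choices made for the example, not an established standard.

```python
from collections import defaultdict

class Router:
    def __init__(self, alpha: float = 0.2, prior_loss: float = 1.0):
        self.alpha = alpha
        # Predicted loss per (step type, agent); unseen pairs start at the prior.
        self.predicted_loss = defaultdict(lambda: prior_loss)

    def report(self, step: str, agent: str, observed_loss: float) -> None:
        """Scoring: fold a new observation into an exponential moving average."""
        key = (step, agent)
        old = self.predicted_loss[key]
        self.predicted_loss[key] = (1 - self.alpha) * old + self.alpha * observed_loss

    def route(self, steps: list[str], agents: list[str]) -> list[str]:
        """Routing: pick, for each step, the agent with the lowest predicted loss."""
        return [
            min(agents, key=lambda agent: self.predicted_loss[(step, agent)])
            for step in steps
        ]

router = Router()
router.report("code", "coder_agent", observed_loss=0.1)
router.report("copy", "writer_agent", observed_loss=0.2)
plan = router.route(["copy", "code"], ["coder_agent", "writer_agent"])
```

Here the plan assigns the copywriting step to `writer_agent` and the coding step to `coder_agent`. A decentralized version would also need to decide who is trusted to report losses, which is where reputation and mechanism design come in.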

Commitment Devices

Blockchains are credible commitment devices. These devices can be useful in cases where centralized systems for enforcing or incentivizing cooperation fail, as they do not require a trusted third party. Commitment devices help enable credible multi-agent coordination.

There is extensive work being done to build the foundations for formal contracting in multi-agent systems. The goal is to reduce collusion and negative externalities that arise from the lack of cooperation between independent AI systems.

For a virtual agent, it is much more natural to use code and cryptographic signatures, in the role that the traditional legal framework plays for humans, to achieve higher efficiency and cooperation in the market. The use of contracting by agents would promote better social outcomes for the cybereconomy in general.
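
A minimal example of a commitment device is a hash-locked escrow: the buyer locks funds that are released only if the seller delivers data matching a hash agreed on in advance, before a deadline. The sketch below models this in plain Python rather than as a smart contract, and it assumes the hash of the deliverable is known up front (for instance, a published dataset). All names are illustrative.

```python
import hashlib

class HashLockedEscrow:
    def __init__(self, buyer: str, seller: str, amount: int,
                 expected_sha256: str, deadline: int):
        self.buyer, self.seller, self.amount = buyer, seller, amount
        self.expected_sha256 = expected_sha256
        self.deadline = deadline
        self.settled = False

    def claim(self, deliverable: bytes, now: int) -> tuple[str, int]:
        """Seller gets paid by presenting the agreed deliverable in time."""
        if self.settled:
            raise RuntimeError("escrow already settled")
        if now > self.deadline:
            raise RuntimeError("deadline passed")
        if hashlib.sha256(deliverable).hexdigest() != self.expected_sha256:
            raise ValueError("deliverable does not match the commitment")
        self.settled = True
        return self.seller, self.amount

    def refund(self, now: int) -> tuple[str, int]:
        """Buyer recovers the funds only after the deadline has passed."""
        if self.settled:
            raise RuntimeError("escrow already settled")
        if now <= self.deadline:
            raise RuntimeError("deadline not reached")
        self.settled = True
        return self.buyer, self.amount
```

Neither party can change the terms after funding: the seller cannot be paid for the wrong data, and the buyer cannot withdraw early. That is what makes the commitment credible without a trusted third party.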

Governance

The final and all-encompassing component of decentralized AI systems is governance. Who will decide what models and agents are or are not allowed to do, and how? Who owns and steers them? How are alignment and AI explainability handled?

Decentralized Autonomous Organizations (DAOs) explore the new frontier of programmable democracy. It is important to note that this is not one project or framework, but rather a whole design space, ranging from voting, execution and treasury management to entire organizations that are augmented, enhanced and managed by an AI swarm.
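
The simplest point in this design space, token-weighted voting with a quorum, can be sketched as follows. The 40% quorum and the simple-majority rule are arbitrary choices for the example, not a recommendation or a description of any specific DAO.

```python
def tally(votes: dict[str, bool], balances: dict[str, int], quorum: float = 0.4) -> bool:
    """Return True if a proposal passes.

    votes:    voter -> True (yes) or False (no)
    balances: holder -> token balance, used as voting weight
    quorum:   minimum share of total supply that must take part
    """
    total_supply = sum(balances.values())
    if total_supply == 0:
        return False
    yes = sum(balances.get(voter, 0) for voter, choice in votes.items() if choice)
    no = sum(balances.get(voter, 0) for voter, choice in votes.items() if not choice)
    turnout = (yes + no) / total_supply
    return turnout >= quorum and yes > no

balances = {"alice": 50, "bob": 30, "carol": 20}
passed = tally({"alice": True, "bob": False}, balances)
no_quorum = tally({"carol": True}, balances)
```

The first proposal passes with 80% turnout and a yes majority; the second fails because only 20% of the supply voted.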

Conclusion

The advent of Artificial Intelligence and autonomous agents represents a significant turning point in human evolution. These technologies have the potential to redefine our lives and society at large, which underscores the need to ensure that access to AI becomes a fundamental right for all.

However, the current centralization of AI ownership and development poses serious risks, including the abuse of power and a slowdown in innovation. In contrast, decentralized AI provides a promising alternative, fostering innovation, ownership and control.

By supporting the growth of decentralized AI, we can ensure a future where AI benefits all of humanity.

Get Involved

Cyber.fund exists to accelerate and ensure a healthy transition to a cybernetic economy. We’re actively investing in decentralized AI as well as other verticals mentioned in this article. If you’re building in the space of decentralized AI, feel free to reach out: @sgershuni on Twitter and Telegram, or [email protected]

Thank you to the reviewers for their insightful comments and suggestions, which have greatly improved the quality and accuracy of this post: Artem, Avi, Tom Shaugnessy, Bayram Annakov, Vasiliy Shapovalov, Fabrizio, Sergey Anosov.